After searching for a good-enough DNS serving solution, I realized that I am simply not satisfied with the current Linux self-hosting ecosystem. Some of these solutions let you choose which software to use for specific services (if they offer a choice at all), and then abstract its interface to some extent.

My problem with ALL of them is that they simply assume you want to use well-known software and only need its management simplified. Well, if that software did a better job, sure, why not. I am not saying it is doing a bad job; it is simply not enough anymore, and the maintainers of these free and open-source projects don’t get paid enough, if at all, to move them forward.

So, to solve this for my own needs, I am retiring op-nslookup and going back to basics: what do I expect from my self-hosting solution?

I’ll first try to answer this as a whole, and then go into detail.

Expectations

- REST API Management – A GUI is nice, but the API should be a first-class citizen: every option available in the GUI should be an action I can perform through the API

- Configurability – Every behavior of a service should be configurable

- Best Practices – Every configuration option should default to the value considered best practice; bad behavior may still be allowed for whatever reason, but it should be documented and produce appropriate log messages

- Security – Every user interaction should go through security assertions; insecure configurations should be documented and produce appropriate log messages

- Usability – Having said all that, the services should be easy to use. For example, while spf2 configurations might be more secure, they make email unusable in most common cases

- Interoperability – While I would be proud to have my own full-blown ecosystem, I am not operating in a vacuum. Following the RFCs is a given; beyond that, I mean supporting common configuration files and a ubiquitous language. There is no reason to reinvent the wheel, but building a better one might be an interesting journey

- One ring to rule them all – Have a single source of truth for shared settings. For example, when a domain is served over DNS, Email and Web, its settings should live in one place, and the DNS, MX and HTTP services should derive their internal configurations from that source whenever it changes
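To make the "One ring to rule them all" and "Best Practices" points a bit more concrete, here is a minimal Python sketch of one possible shape for them. It assumes a hypothetical in-memory `Registry` that holds per-domain settings and pushes every change to subscribed services; `DomainSettings`, its fields, the `selfhost` logger name and the three service classes are illustrative names, not an existing project's API, and the zone lines and vhost dict are simplified stand-ins for real DNS and HTTP configuration.

```python
import logging
from dataclasses import dataclass

log = logging.getLogger("selfhost")  # hypothetical logger name


@dataclass
class DomainSettings:
    """Shared per-domain settings: the single source of truth (hypothetical fields)."""
    name: str
    address: str              # IPv4 address serving the domain
    mail_host: str = ""       # empty means "use the best-practice default"
    https_only: bool = True   # secure default; may be disabled, but that gets logged

    def __post_init__(self):
        if not self.mail_host:
            self.mail_host = f"mail.{self.name}"


class Registry:
    """Holds domain settings and pushes every change to subscribed services."""

    def __init__(self):
        self.domains = {}
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def set_domain(self, settings):
        if not settings.https_only:
            # Insecure but allowed: log it instead of refusing the change.
            log.warning("%s: https_only is disabled, plain HTTP will be served", settings.name)
        self.domains[settings.name] = settings
        for callback in self._subscribers:
            callback(settings)


class DnsService:
    def __init__(self):
        self.zone = {}

    def on_change(self, d):
        # Derive A and MX records from the shared settings.
        self.zone[d.name] = [
            f"{d.name}. IN A {d.address}",
            f"{d.mail_host}. IN A {d.address}",
            f"{d.name}. IN MX 10 {d.mail_host}.",
        ]


class MailService:
    def __init__(self):
        self.accepted_domains = set()

    def on_change(self, d):
        # The mail side only needs to know which domains it accepts.
        self.accepted_domains.add(d.name)


class WebService:
    def __init__(self):
        self.vhosts = {}

    def on_change(self, d):
        self.vhosts[d.name] = {"server_name": d.name, "redirect_http_to_https": d.https_only}
```

Wiring it up is a matter of calling `registry.subscribe(service.on_change)` once per service; a single `registry.set_domain(DomainSettings("example.org", "192.0.2.10"))` then updates the zone, the accepted mail domains and the vhost together. Pushing changes to subscribers keeps each service's internal configuration format private, while the shared settings stay in one place.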

Details – TBD

I’ll dive into each service in a future post.

PoC – Email

I have already started working on an Email service, Python-based, using the Twisted framework. I have looked for other frameworks in the past, hoping the Python async ecosystem had something better, but so far Twisted is the only comprehensive framework for network services, and it already has very good implementations of the individual protocols.

Since Email is one of the fields I am most familiar with (after DNS), I am starting with a really simple local submission server (no authentication, acceptance only). The goals of the PoC:

- Support domains

- Support users

- Support aliases

- Support domain catch-all

- Support local delivery

- Support server catch-all

- Support delayed rejections (accept the message, then, based on rules, send a rejection back to the sender or to the postmaster)

- Support two delivery backends

  - Filesystem (JSON files)

  - SQLite + Filesystem (for the message body)
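A minimal sketch of how these goals could fit together, written in plain Python so it runs without Twisted. A hypothetical `MailConfig` holds domains, users, aliases and both catch-alls; `resolve()` applies them in an assumed order (user, alias, domain catch-all, server catch-all); two interchangeable backends share one `deliver()` method; and `handle()` decides between local delivery and a delayed rejection. All names, the lookup order, the case-insensitive addresses, the alias-depth limit and the `postmaster@localhost` default are my assumptions, not taken from the repository. In a real Twisted server this logic would presumably sit behind the recipient validation of `twisted.mail.smtp.IMessageDelivery`.

```python
import json
import sqlite3
import uuid
from dataclasses import dataclass, field
from pathlib import Path
from typing import Optional


@dataclass
class MailConfig:
    """Hypothetical routing tables; addresses are stored lower-case."""
    domains: set = field(default_factory=set)             # hosted domains
    users: set = field(default_factory=set)               # e.g. "alice@example.org"
    aliases: dict = field(default_factory=dict)           # alias -> target address
    domain_catch_all: dict = field(default_factory=dict)  # domain -> target address
    server_catch_all: Optional[str] = None                # target for anything else


def resolve(config: MailConfig, address: str, depth: int = 0) -> Optional[str]:
    """Map a recipient to a local mailbox, or None if nothing matches."""
    if depth > 10:  # guard against alias loops
        return None
    address = address.lower()  # assumption: addresses are treated case-insensitively
    _local, at, domain = address.rpartition("@")
    if not at:
        return None
    if domain in config.domains:
        if address in config.users:
            return address
        if address in config.aliases:
            return resolve(config, config.aliases[address], depth + 1)
        if domain in config.domain_catch_all:
            return config.domain_catch_all[domain]
    return config.server_catch_all


class JsonFileBackend:
    """Backend 1: one JSON file per message, one directory per mailbox."""

    def __init__(self, root):
        self.root = Path(root)

    def deliver(self, mailbox: str, sender: str, body: bytes) -> str:
        msg_id = uuid.uuid4().hex
        folder = self.root / mailbox
        folder.mkdir(parents=True, exist_ok=True)
        record = {"id": msg_id, "from": sender, "to": mailbox,
                  "body": body.decode("utf-8", errors="replace")}
        (folder / f"{msg_id}.json").write_text(json.dumps(record), encoding="utf-8")
        return msg_id


class SqliteBackend:
    """Backend 2: metadata in SQLite, the raw message body as a file."""

    def __init__(self, db_path, body_root):
        self.body_root = Path(body_root)
        self.body_root.mkdir(parents=True, exist_ok=True)
        self.db = sqlite3.connect(str(db_path))
        self.db.execute("CREATE TABLE IF NOT EXISTS messages ("
                        "id TEXT PRIMARY KEY, mailbox TEXT, sender TEXT, body_path TEXT)")

    def deliver(self, mailbox: str, sender: str, body: bytes) -> str:
        msg_id = uuid.uuid4().hex
        body_path = self.body_root / f"{msg_id}.eml"
        body_path.write_bytes(body)
        with self.db:  # commits the insert on success
            self.db.execute("INSERT INTO messages VALUES (?, ?, ?, ?)",
                            (msg_id, mailbox, sender, str(body_path)))
        return msg_id


def handle(config, backend, sender, recipient, body, postmaster="postmaster@localhost"):
    """Deliver locally, or decide who receives the delayed rejection."""
    mailbox = resolve(config, recipient)
    if mailbox is not None:
        return ("delivered", backend.deliver(mailbox, sender, body))
    # The message was already accepted, so the rejection happens after the fact.
    # An empty sender (the null reverse-path "<>") has nowhere to bounce to,
    # so the postmaster is notified instead.
    return ("bounced", sender or postmaster)
```

In this sketch the server catch-all also receives mail for domains the server does not host, which is convenient for a local-only PoC but would need explicit rules before the service is exposed to the outside world.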

Common mail setups are split into an MSA, MTA, MDA and other acronyms for services with specific jobs. These roles will probably still be represented in my solution, but the lines have blurred as these systems became less naive (SSL/TLS, anti-spam, anti-virus, rate limits, etc.). Still, I will try to keep the common concepts in place, so if you know your Postfix / Dovecot / Exim, you will feel almost at home.

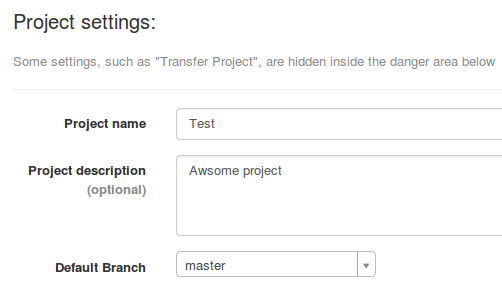

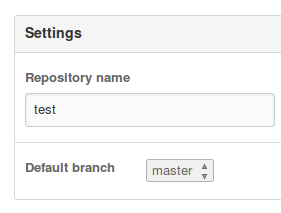

The work is in a repository I started a few years ago: https://gitlab.com/uda/txmx

I will do my best to keep up with my own standards 😉